Over the last two decades, or perhaps since the invention and widespread adoption of the smartphone, we have seen AI subtly come into our lives … to stay! Examples are innumerable. From our calendars, warning us of upcoming meetings, to Amazon Echo, Google Home, and Siri, our lives are now, in a certain way, deeply embedded in and guided by all kinds of apps and devices that use AI. Well… as the famous Canadian philosopher Marshall McLuhan said:

“We become what we behold. We shape our tools and then our tools shape us.”

So, even if we don’t devote much time to thinking about how the world, and we in it, are changing at rapid speed and becoming more and more deeply embedded in complex technology, what is going on is profoundly affecting what we understand by being human.

In a certain way, it’s always been like this. We just need to remember what is at the root of the word technology. The word stems from “techne”, art or craft, conjoined with “logos”, which means word or discourse: the putting into practice, into embodiment, of discourse, of words. In a certain way, that is the history of civilization, and examples abound: the taming of fire, the invention of agriculture… or the pencil, an instrument for writing and a technological tool that expands our human capabilities, just as the keyboard does, or the paper calendar where you write down all your appointments.

So as we design technology, technology designs us back. But as technology evolves and becomes more complex, new issues arise. Until now, technology was clearly understood as that moment when design meets consciousness, the consciousness of a human. Nowadays, though, technology is so powerful and quick that the frontiers of who is doing what are becoming blurred. Some speak of this as the technological singularity (or simply the singularity): the hypothesis that the invention of artificial superintelligence (ASI), where computers are actually more intelligent and faster than humans, will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization and a redefinition of what it is to be human…

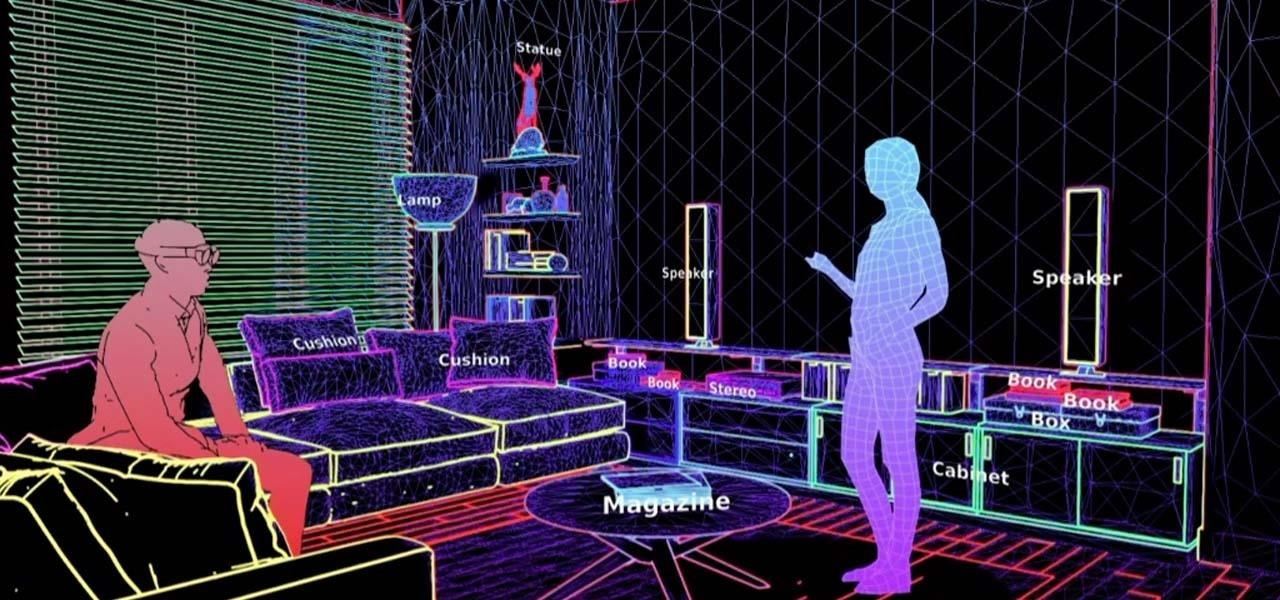

This is hitting home particularly with the recent developments in deep learning neural networks. Automation is one thing, intelligent machine learning is another, and what people call Mixed Reality is yet another. Mixed reality, also referred to as hybrid reality, is the merging of real and virtual worlds to produce new environments and visualizations where physical and digital objects co-exist and interact in real time. It takes place neither in the physical world alone nor in the virtual world alone, but in a mix of the two.

One company working with Mixed Reality is Magic Leap, which recently introduced us to Mica at LEAPcon, in October 2018. Mica is a virtual girl, very human-like, who doesn’t talk but interacts with you through body language. She smiles, averts her gaze, stares at you, and yawns. Mica looks, and acts, like a human being.

Of course, to see Mica, the virtual girl who doesn’t talk, you do need to use a headset, the company’s Magic Leap One.

Mica was developed by Magic Leap, an American startup that built a head-mounted virtual retinal display called Magic Leap One. The headset superimposes 3D computer-generated imagery over real-world objects by “projecting a digital light field into the user’s eye”. The possible applications of the technology are in augmented reality and computer vision.

Magic Leap was founded by Rony Abovitz in 2010, and even though the company wasn’t able to produce anything tangible until late 2017, it raised $1.4 billion from a list of investors including Google and China’s Alibaba Group. In December 2016, Forbes estimated that Magic Leap was worth $4.5 billion. On December 20, 2017, the company announced its first product, the Magic Leap One Creator Edition, and now it has finally presented Mica to us.

Adario Strange had the chance to meet Mica at LEAPcon, and wrote an interesting review of his experience in Next Reality.

Upon donning the Magic Leap One, I’m greeted by a virtual woman (Mica) sitting at the very real wooden table. Then, Mica, with an inviting smile, gestures for me to join her and sit in the chair opposite her. I oblige, and then a very weird interaction begins — she starts smiling at me, seemingly looking for a reaction. . .

. . . I’ll admit, I deliberately avoided smiling (though it was really hard, Mica seems so nice) and kept a poker face in an attempt to see if I could somehow throw the experience off by not doing the expected, that is, returning the smile.

Undaunted, Mica continued to look into my eyes and go through a series of “emotions” that, surprisingly, made me feel a bit guilty about being so stoic.

In his description, Adario Strange tells us how he suddenly found himself rearranging objects in the real world, induced to do it by Mica:

That would have been enough to mildly impress me, but what came next was the kicker. She then pointed to a real wooden picture frame on the table, gesturing for me to hang it on a pin on the wall next to us. I did as asked, and… it was the eureka moment. This was a virtual human sitting at a real world table and she just got me to change something in the real world based on her direction.

But then it got better. Once I’d hung the empty frame, Mica got up (she’s about five feet six inches tall) and began writing a message inside the frame, which in context looked about as real as if an actual person had begun writing on the space.

Alas, I don’t remember what the message was (honestly, I was too blown away by what was happening), but I’m assuming it was somewhat profound, as Mica then looked to me in a way that seemed to ask that I consider the meaning of the message. After a few beats, the life-sized, augmented reality human walked out of the room. But she didn’t just disappear into a wall in a flurry of sparkly AR dust. Instead, she walked behind a real wall in the room leading to a hallway. It was a subtle but powerful touch that increased the realism of the entire interaction.

The ethical challenges raised by the example of Mica are evident. What kind of world are we designing if we now have AI embodied in human form, which looks at us, reacts to our movements, responds to our gaze, and tells us to do things? How will we be designed back, and what will this mean for the concept of being human… or post-human?

These are difficult and complex questions. Even if only the future will answer them, it is essential to ask them and to trigger debate.

Maria Fonseca is the Editor and Infographic Artist for IntelligentHQ. She is also a thought leader writing about social innovation, the sharing economy, social business, and the commons. Aside from her work for IntelligentHQ, Maria Fonseca is a visual artist and filmmaker who has exhibited widely at international events and venues such as Manifesta 5, Sao Paulo Biennial, Photo Espana, Moderna Museet in Stockholm, Joshibi University and many others. She completed her PhD on essayistic filmmaking at the University of Westminster in London and is preparing a postdoc that will explore the links between creativity and the sharing economy.