This is part 2 of a six-part guide to artificial intelligence. The guide covers its basic concepts, history and present applications, its possible future developments, and its challenges and opportunities.

The History of AI

Where did artificial intelligence begin? Many consider the history of AI to date back to World War II and the work of Alan Turing. Turing was a renowned mathematician and computer scientist who, with his team at Bletchley Park, worked to decipher the secure messages that the German military encrypted with its “Enigma” machine. The team worked tirelessly and ultimately built a machine that could crack the code. This work led Turing to consider whether machines could be made to solve problems and make decisions. He set out these ideas in a 1950 paper, “Computing Machinery and Intelligence”, but the computers of the time were not yet powerful enough to put them into practice.

However, it was not long before other mathematicians and scientists began exploring the possibilities. During the 1950s, Allen Newell, Cliff Shaw and Herbert Simon developed the Logic Theorist, a programme designed to solve problems in the same way that people do.

Meanwhile, in 1956, John McCarthy organised a conference at Dartmouth College, which is widely regarded as the place where the term ‘artificial intelligence’ was coined. After this, research began in earnest to turn the concept into reality, with research programmes set up in different countries.

AI in its Early Days

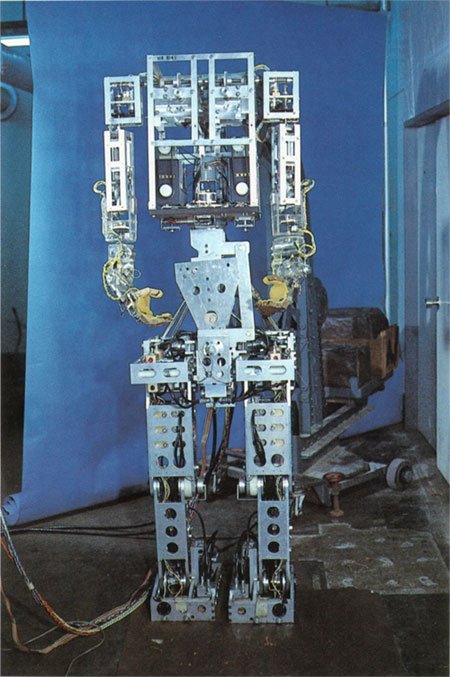

Between the mid-1950s and the mid-1970s, a good deal of progress was made in artificial intelligence, not least because computers improved: they could store far more information, they became more affordable, and they ran much faster, making AI a more practical possibility. As a result, machine learning algorithms were developed and refined, and understanding of how to build them grew. During the 1960s, developing algorithms to solve mathematical problems was a particularly important area of focus. In 1972, this work produced WABOT-1, built at Waseda University in Japan and widely considered the first full-scale intelligent humanoid robot.

However, it was still proving hard to build machines that could use artificial intelligence effectively. A great deal had been invested in AI, and the lack of results led to frustration in both organisations and governments. This resulted in a period of reduced funding for artificial intelligence, which has been termed an “AI winter”. Part of the problem was that, although computers had improved, they still lacked the storage capacity and processing speed needed for AI to deliver real value.

The Birth of Machine Learning

Interest in AI was rekindled in the 1980s. John Hopfield and David Rumelhart popularised neural network techniques, forerunners of today's deep learning, which enabled programmes to learn from ‘experience’. Meanwhile, Edward Feigenbaum introduced expert systems, which mimicked the way human experts make decisions. The Japanese government found this latter concept particularly interesting and invested heavily in it during the 1980s through its Fifth Generation Computer Project. Although the project largely failed to meet its goals, the lessons learned helped with the further development of AI.

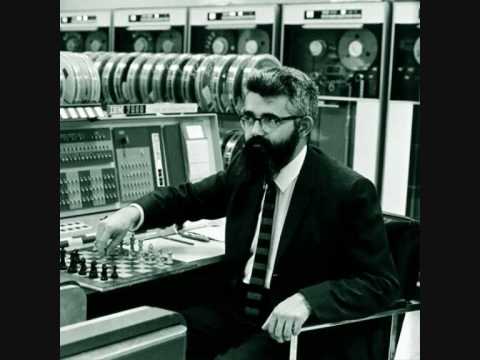

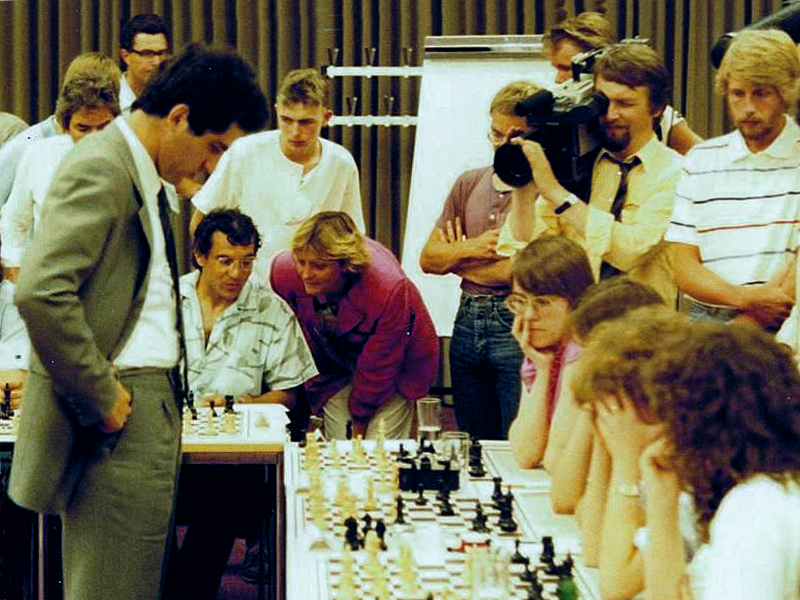

In the 1990s, companies became interested in AI again. In 1997, IBM's chess-playing system ‘Deep Blue’ defeated the reigning world champion, Garry Kasparov. Speech recognition software also reached the market in the 1990s, strengthening the belief that AI could do more. Cynthia Breazeal even built a robot, Kismet, that could recognise and simulate human emotions.

How is AI doing now?

More recently, AI development has accelerated considerably. This is partly thanks to the lessons of the past, but largely due to technological advances. Computers now have vastly greater processing power and storage capacity than in the past, and quantum computing is evolving rapidly. As a result, machine learning has advanced much further, because it can now draw on far larger volumes of data. This has allowed companies to use machine learning and artificial intelligence to better understand customer behaviour and decisions. Companies such as Amazon and Google have been at the forefront of developing these technologies.

Paula Newton is a business writer, editor and management consultant with extensive experience writing and consulting for both start-ups and long-established companies. She has ten years of management and leadership experience gained at BSkyB in London and Viva Travel Guides in Quito, Ecuador, giving her deep insight into innovation in international business. With an MBA from the University of Hull and many years of experience running her own business consultancy, Paula’s background allows her to connect with a diverse range of clients, from cutting-edge technology and web-based start-ups to multinationals in need of assistance. Paula has played a defining role in shaping organizational strategy for a wide range of organizations, including for-profit companies, NGOs and charities. Paula has also served on the Board of Directors for the South American Explorers Club in Quito, Ecuador.